In the previous two posts I gave a definition of stochastic integration. This was achieved via an explicit expression for elementary integrands, and extended to all bounded predictable integrands by bounded convergence in probability. The extension to unbounded integrands was done using dominated convergence in probability. Similarly, semimartingales were defined as those cadlag adapted processes for which such an integral exists.

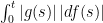

The current post will show how the basic properties of stochastic integration follow from this definition. First, if $V$ is a cadlag process whose sample paths are almost surely of finite variation over an interval $[0,t]$, then $\int_0^t\xi\,dV$ can be interpreted as a Lebesgue-Stieltjes integral on the sample paths. If the process is also adapted, then it will be a semimartingale and the stochastic integral can be used. Fortunately, these two definitions of integration do agree with each other. The term FV process is used to refer to such cadlag adapted processes which are almost surely of finite variation over all bounded time intervals. The notation $\int_0^t\vert\xi\vert\,\vert dV\vert$ represents the Lebesgue-Stieltjes integral of $\vert\xi\vert$ with respect to the variation of $V$. Then, the condition for $\xi$ to be $V$-integrable in the Lebesgue-Stieltjes sense is precisely that this integral is finite.

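Lemma 1 Every FV process $V$ is a semimartingale. Furthermore, let $\xi$ be a predictable process satisfying

$$\int_0^t\vert\xi\vert\,\vert dV\vert<\infty\tag{1}$$

almost surely, for each $t\ge0$. Then, $\xi\in L^1(V)$ (that is, $\xi$ is $V$-integrable) and the stochastic integral $\int\xi\,dV$ agrees with the Lebesgue-Stieltjes integral, with probability one.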

Proof: First, for bounded predictable integrands, the stochastic integral can simply be defined as the Lebesgue-Stieltjes integral on the sample paths. This clearly agrees with the explicit expression for elementary integrands and, by the bounded convergence theorem, satisfies bounded convergence in probability, as required.

Now, suppose that the predictable process $\xi$ satisfies (1), so it is integrable with respect to $V$ in the Lebesgue-Stieltjes sense. If $\vert\xi^n\vert\le\vert\xi\vert$ is a sequence of bounded predictable processes tending to a limit $\alpha$ then, by the dominated convergence theorem for Lebesgue integration, the following limit holds

$$\int_0^t\xi^n\,dV\to\int_0^t\alpha\,dV$$

with probability one and, therefore, also under convergence in probability. Choosing $\alpha=0$ shows that $\xi\in L^1(V)$. Then, choosing $\alpha=\xi$ shows that the Lebesgue-Stieltjes integral $\int_0^t\xi\,dV$ agrees with the stochastic integral. ⬜
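The first step of this proof can be sanity-checked numerically. The following Python sketch fixes a single deterministic FV path, a smooth part $s^2$ plus two jumps chosen purely for illustration, and an elementary integrand $\xi=\sum_kZ_k1_{(s_k,t_k]}$. It compares the explicit expression $\sum_kZ_k(V_{t_k}-V_{s_k})$ with the Lebesgue-Stieltjes integral against $dV=2s\,ds+\sum_u\Delta V_u\,\delta_u$. The helpers `V`, `elementary_integral` and `stieltjes_integral` are hypothetical names, not anything defined in these notes, and the absolutely continuous part is only approximated by a midpoint rule.

```python
# Hypothetical deterministic FV path on [0, 1]: smooth part s**2 plus two jumps.
JUMPS = {0.25: 1.5, 0.6: -0.7}  # jump time -> jump size (illustrative only)


def V(t):
    """Right-continuous FV path: V(t) = t^2 + (sum of the jumps at times <= t)."""
    return t * t + sum(size for u, size in JUMPS.items() if u <= t)


def elementary_integral(pieces, t):
    """Explicit expression sum_k Z_k (V(t_k ^ t) - V(s_k ^ t)) for pieces [(Z_k, s_k, t_k)]."""
    return sum(z * (V(min(b, t)) - V(min(a, t))) for z, a, b in pieces)


def stieltjes_integral(xi, t, n=100_000):
    """Integral of xi over (0, t] against dV = 2s ds + point masses at the jumps.

    The absolutely continuous part is approximated by a midpoint rule on n cells.
    """
    h = t / n
    smooth = sum(xi((i + 0.5) * h) * 2.0 * (i + 0.5) * h * h for i in range(n))
    atoms = sum(xi(u) * size for u, size in JUMPS.items() if 0 < u <= t)
    return smooth + atoms


pieces = [(2.0, 0.1, 0.3), (-1.0, 0.5, 0.9)]  # xi = 2 on (0.1, 0.3], -1 on (0.5, 0.9]


def xi(s):
    """Left-continuous elementary integrand built from `pieces`."""
    return sum(z for z, a, b in pieces if a < s <= b)


print(elementary_integral(pieces, 1.0), stieltjes_integral(xi, 1.0))
```

Both printed values should be close to 3.3. For elementary integrands the two definitions coincide exactly, so any visible discrepancy comes only from the numerical quadrature.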

Next, associativity of integration can be shown. This is easiest to understand in the differential form, in which case equation (2) below simply says that $\alpha\,(\xi\,dX)=(\alpha\xi)\,dX$.

Theorem 2 (Associativity) Suppose that $Y=\int\xi\,dX$ for a semimartingale $X$ and $X$-integrable process $\xi$. Then, $Y$ is a semimartingale and a predictable process $\alpha$ is $Y$-integrable if and only if $\alpha\xi$ is $X$-integrable, in which case

$$\int\alpha\,dY=\int\alpha\xi\,dX.\tag{2}$$

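Proof: That $Y$ is a semimartingale, and that equation (2) is satisfied for bounded $\alpha$, has already been shown in the previous post, in the proof of existence of cadlag versions of integrals. Now, suppose that $\alpha\xi\in L^1(X)$, and choose a sequence of bounded predictable processes $\vert\alpha^n\vert\le\vert\alpha\vert$ tending to a limit $\beta$. As $\alpha^n\xi$ is dominated by the $X$-integrable process $\alpha\xi$,

$$\int_0^t\alpha^n\,dY=\int_0^t\alpha^n\xi\,dX\to\int_0^t\beta\xi\,dX$$

in probability, as $n\to\infty$. Taking $\beta=0$ gives zero for the right hand side so, by definition, $\alpha\in L^1(Y)$. Then, taking $\beta=\alpha$, dominated convergence in probability shows that the left hand side tends to $\int_0^t\alpha\,dY$, and equation (2) follows.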

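Conversely, suppose that $\alpha\in L^1(Y)$ and let $\vert\zeta^n\vert\le\vert\alpha\xi\vert$ be a sequence of bounded predictable processes satisfying $\zeta^n\to0$. Write $\alpha^n=1_{\{\xi\not=0\}}\zeta^n/\xi$. Then $\vert\alpha^n\vert\le\vert\alpha\vert$ and $\alpha^n\xi=\zeta^n$, since $\zeta^n$ vanishes wherever $\xi$ does. As $\alpha^n\xi$ is bounded, the above argument shows that $\alpha^n\in L^1(Y)$ and

$$\int_0^t\zeta^n\,dX=\int_0^t\alpha^n\xi\,dX=\int_0^t\alpha^n\,dY.$$

By dominated convergence, this tends to zero in probability as $n\to\infty$. So, $\alpha\xi\in L^1(X)$ as required. ⬜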
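For elementary integrands, equation (2) is just an algebraic identity between sums; that is the case the proof takes from the previous post. The Python sketch below only illustrates this discrete identity, not the limiting argument. It uses left-point Riemann sums on a grid and simulated Brownian increments for $X$. The predictable integrands $\xi$ and $\alpha$ are chosen arbitrarily and evaluated at the left end of each grid interval. The grid size, seed and helper names are assumptions made for illustration.

```python
import random


def left_point_integral(integrand, increments):
    """Path of int xi dX on a grid, started at 0.

    integrand[i] is the value of xi on the i-th grid interval (taken at its left end),
    increments[i] is X_{t_{i+1}} - X_{t_i}.
    """
    path = [0.0]
    for value, dx in zip(integrand, increments):
        path.append(path[-1] + value * dx)
    return path


def increments_of(path):
    """Successive differences Y_{t_{i+1}} - Y_{t_i} of a grid path."""
    return [b - a for a, b in zip(path, path[1:])]


rng = random.Random(1)
n = 1000
dX = [rng.gauss(0.0, (1.0 / n) ** 0.5) for _ in range(n)]  # Brownian increments on [0, 1]
X = left_point_integral([1.0] * n, dX)                      # X_t - X_0 on the grid
xi = [1.0 + x * x for x in X[:-1]]                          # left-end values: predictable
Y = left_point_integral(xi, dX)                             # Y = int xi dX
alpha = [abs(y) for y in Y[:-1]]                            # another predictable integrand

lhs = left_point_integral(alpha, increments_of(Y))                 # int alpha dY
rhs = left_point_integral([a * x for a, x in zip(alpha, xi)], dX)  # int (alpha xi) dX
print(max(abs(a - b) for a, b in zip(lhs, rhs)))  # only floating-point rounding remains
```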

Note that, as Theorem 2 gives an ‘if and only if’ condition for $\alpha\xi$ to be $X$-integrable, the definition of $X$-integrable processes as good dominators given in these notes is precisely the correct set of processes to make this theorem hold. In fact, noting that $\xi/(1+\vert\xi\vert)$ and $1/(1+\vert\xi\vert)$ are bounded for any process $\xi$, associativity gives the following alternative criterion for $X$-integrability and definition of stochastic integration for unbounded integrands.

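Corollary 3 Let $X$ be a semimartingale and $\xi$ be a predictable process. Then, $\xi$ is $X$-integrable if and only if there exists a semimartingale $Y$ satisfying $Y_0=0$ and

$$\int\frac{1}{1+\vert\xi\vert}\,dY=\int\frac{\xi}{1+\vert\xi\vert}\,dX,\tag{3}$$

in which case $Y=\int\xi\,dX$.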

Proof: First, if $\xi\in L^1(X)$ and $Y=\int\xi\,dX$ then equation (3) follows from associativity of the stochastic integral. Conversely, suppose that $Y$ is a semimartingale satisfying (3) and that $Y_0=0$. Letting $Z$ equal the integral on the left hand side of (3), associativity of integration shows that $1+\vert\xi\vert$ is $Z$-integrable and

$$\int(1+\vert\xi\vert)\,dZ=\int1\,dY=Y.$$

Similarly, as $Z$ is also equal to the right hand side of (3), associativity shows that $\xi$ is $X$-integrable and

$$\int(1+\vert\xi\vert)\,dZ=\int\xi\,dX.$$

Comparing these equalities gives $Y=\int\xi\,dX$ as required. ⬜

Stochastic integrals behave particularly well under stopping. Recall that $X^\tau_t\equiv X_{\tau\wedge t}$ represents a process $X$ stopped at the time $\tau$.

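Lemma 4 Let $X$ be a semimartingale, $\xi$ be $X$-integrable and $\tau$ be a stopping time. Then, the stopped process $X^\tau$ is also a semimartingale, $\xi$ is $X^\tau$-integrable and

$$\left(\int\xi\,dX\right)^\tau=\int1_{(0,\tau]}\xi\,dX=\int\xi\,dX^\tau.\tag{4}$$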

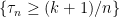

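Proof: Approximating $\tau$ by the discrete stopping times $\tau_n=2^{-n}\lceil2^n\tau\rceil$ gives

$$X^{\tau_n}_t-X_0=\sum_{k=1}^\infty1_{\{\tau_n\ge k2^{-n}\}}\left(X_{t\wedge k2^{-n}}-X_{t\wedge(k-1)2^{-n}}\right)=\int_0^t1_{(0,\tau_n]}\,dX.$$

As $\tau_n\downarrow\tau$, we have $1_{(0,\tau_n]}\to1_{(0,\tau]}$. Bounded convergence in probability, together with right-continuity of $X$, then gives

$$X^\tau_t-X_0=\lim_{n\to\infty}\left(X^{\tau_n}_t-X_0\right)=\int_0^t1_{(0,\tau]}\,dX.$$

By associativity of the stochastic integral, $X^\tau=X_0+\int1_{(0,\tau]}\,dX$ is a semimartingale. Furthermore, $1_{(0,\tau]}\xi$ is dominated by $\xi$, so is $X$-integrable. Associativity then shows that $\xi$ is $X^\tau$-integrable with $\int\xi\,dX^\tau=\int1_{(0,\tau]}\xi\,dX$, which is the right hand equality of (4).

Similarly, integrating $1_{(0,\tau]}$ with respect to $\int\xi\,dX$ gives,

$$\left(\int\xi\,dX\right)^\tau=\int1_{(0,\tau]}\,d\left(\int\xi\,dX\right)=\int1_{(0,\tau]}\xi\,dX$$

as required. ⬜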
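The identity used for the discrete approximations $\tau_n$ can be checked path by path. The Python sketch below fixes one hypothetical cadlag sample path `X` and a single value of $\tau$. It therefore says nothing about measurability, which is where the stopping time property is really used. It confirms that the dyadic sum telescopes to $X_{t\wedge\tau_n}-X_0$ and that $\tau_n$ decreases to $\tau$. The function names are illustrative only.

```python
import math


def dyadic_stopping_time(tau, n):
    """tau_n = ceil(2^n tau) / 2^n: takes values on the dyadic grid and decreases to tau."""
    return math.ceil(tau * 2**n) / 2**n


def stopped_via_sum(X, tau, n, t):
    """sum_k 1_{tau_n >= k 2^-n} (X_{t ^ k 2^-n} - X_{t ^ (k-1) 2^-n}) for one path."""
    tau_n = dyadic_stopping_time(tau, n)
    total, k = 0.0, 1
    while (k - 1) / 2**n < t:  # later terms vanish, as both times are capped at t
        if tau_n >= k / 2**n:
            total += X(min(t, k / 2**n)) - X(min(t, (k - 1) / 2**n))
        k += 1
    return total


def X(s):
    """A hypothetical cadlag sample path with a jump at s = 0.3."""
    return math.sin(5 * s) + (1.0 if s >= 0.3 else 0.0)


tau, t = 0.4137, 1.0
for n in (2, 4, 8, 12):
    tau_n = dyadic_stopping_time(tau, n)
    # The sum telescopes to X_{t ^ tau_n} - X_0, which tends to X_{t ^ tau} - X_0.
    print(n, tau_n, stopped_via_sum(X, tau, n, t), X(min(t, tau_n)) - X(0.0))
```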

Next, the class of $X$-integrable processes is unchanged under localization.

Lemma 5 Let $X$ be a semimartingale. Then, a predictable process $\xi$ is $X$-integrable if and only if it is locally $X$-integrable.

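Proof: If $\xi$ is $X$-integrable then, taking $\tau_n=n$, the processes $1_{(0,\tau_n]}\xi$ are dominated by $\xi$, so $\xi$ is locally $X$-integrable. Conversely, if $\xi$ is locally $X$-integrable then there exist stopping times $\tau_n\uparrow\infty$ such that $1_{(0,\tau_n]}\xi\in L^1(X)$. Choose bounded predictable processes $\vert\xi^m\vert\le\vert\xi\vert$ which tend to zero as $m$ goes to infinity. Then, for any fixed $n$, $1_{(0,\tau_n]}\xi^m$ are dominated by $1_{(0,\tau_n]}\xi$. So, equation (4) and dominated convergence in probability give

$$1_{\{\tau_n\ge t\}}\int_0^t\xi^m\,dX=1_{\{\tau_n\ge t\}}\int_0^t1_{(0,\tau_n]}\xi^m\,dX\to0$$

in probability as $m\to\infty$. By choosing $n$ large, $\mathbb{P}(\tau_n\ge t)$ can be made as close to 1 as required, showing that $\int_0^t\xi^m\,dX$ goes to zero in probability. So, by definition, $\xi\in L^1(X)$. ⬜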

A consequence of this result is the following important statement, which applies to every semimartingale $X$: all locally bounded predictable processes are $X$-integrable. Indeed, if $1_{(0,\tau_n]}\xi$ is bounded for stopping times $\tau_n\uparrow\infty$, then $\xi$ is locally $X$-integrable. In particular, if $Y$ is a cadlag adapted process then its left limits $Y_{t-}$ give a left-continuous and adapted, hence predictable, process $Y_-$. It is, furthermore, locally bounded and the integral $\int Y_-\,dX$ is well defined.

An argument similar to the one above shows that the class of semimartingales is closed under localization.

Lemma 6 A stochastic process is a semimartingale if and only if it is locally a semimartingale.

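Proof: If $X$ is a semimartingale then Lemma 4, with $\tau_n=n$, shows that it is locally a semimartingale. Conversely, by definition, a process $X$ is locally a semimartingale if there are stopping times $\tau_n\uparrow\infty$ such that $1_{\{\tau_n>0\}}X^{\tau_n}$ are semimartingales. The processes $X^{\tau_n}$ are then semimartingales as well. To see this, note that $X^{\tau_n}-X_0=1_{\{\tau_n>0\}}(X^{\tau_n}-X_0)$, and stochastic integrals only depend on the increments of the integrator. For any bounded predictable process $\xi$ and $m\ge n$, Lemma 4 gives

$$\left(\int\xi\,dX^{\tau_m}\right)^{\tau_n}=\int\xi\,dX^{\tau_m\wedge\tau_n}=\int\xi\,dX^{\tau_n}.$$

In particular, $\int_0^t\xi\,dX^{\tau_m}=\int_0^t\xi\,dX^{\tau_n}$ whenever $\tau_n\ge t$. So, we can define the integral with respect to $X$ by

$$\int_0^t\xi\,dX=\lim_{n\to\infty}\int_0^t\xi\,dX^{\tau_n},$$

where the limit on the right hand side is eventually constant, with probability one. This clearly satisfies the explicit expression for elementary integrands. To show that $X$ is a semimartingale, it only remains to prove bounded convergence in probability. So, suppose that $\xi^k\to\xi$ is a uniformly bounded sequence of predictable processes. Dominated convergence in probability can be applied to the semimartingale $X^{\tau_n}$,

$$1_{\{\tau_n\ge t\}}\int_0^t\xi^k\,dX=1_{\{\tau_n\ge t\}}\int_0^t\xi^k\,dX^{\tau_n}\to1_{\{\tau_n\ge t\}}\int_0^t\xi\,dX^{\tau_n}=1_{\{\tau_n\ge t\}}\int_0^t\xi\,dX$$

in probability as $k\to\infty$. Then, by choosing $n$ large enough, $\mathbb{P}(\tau_n\ge t)$ can be made as close to 1 as required, showing that $\int_0^t\xi^k\,dX$ does indeed converge in probability to $\int_0^t\xi\,dX$. ⬜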

Let us now move on to the dominated convergence theorem. Although dominated convergence in probability was required by the definition of stochastic integration, convergence also holds in a much stronger sense. A sequence of processes $X^n$ converges ucp (uniformly on compacts in probability) to a limit $X$ if $\sup_{s\le t}\vert X^n_s-X_s\vert$ tends to zero in probability for each $t\ge0$.
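Since ucp convergence concerns the probability that a running supremum is large, it can be explored by simulation. The Python sketch below estimates $\mathbb{P}(\sup_{s\le t}\vert X^n_s-X_s\vert>\epsilon)$ by Monte Carlo. Here $X$ is a Brownian motion sampled on a fine grid, and $X^n$ freezes $X$ on dyadic intervals of length $2^{-n}$, so it is cadlag and converges ucp to $X$. The helper names, grid size, tolerance $\epsilon$ and seed are all illustrative assumptions. The supremum is only taken over grid points, so it slightly underestimates the true one.

```python
import random


def sup_distance(path_a, path_b, times, t):
    """max over grid points s <= t of |A_s - B_s| (a grid version of the ucp supremum)."""
    return max(abs(a - b) for a, b, s in zip(path_a, path_b, times) if s <= t)


def brownian_and_step(n, m=1024):
    """Sampler for (times, X^n, X): X is Brownian motion on an m-step grid of [0, 1],
    and X^n holds X constant on dyadic intervals of length 2^-n (needs 2^n to divide m)."""
    def sample(rng):
        times = [i / m for i in range(m + 1)]
        x = [0.0]
        for _ in range(m):
            x.append(x[-1] + rng.gauss(0.0, (1.0 / m) ** 0.5))
        step = m // 2**n
        xn = [x[(i // step) * step] for i in range(m + 1)]
        return times, xn, x
    return sample


def prob_sup_exceeds(sampler, t, eps, trials=200, seed=0):
    """Monte Carlo estimate of P(sup_{s <= t} |X^n_s - X_s| > eps)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        times, xn, x = sampler(rng)
        if sup_distance(xn, x, times, t) > eps:
            hits += 1
    return hits / trials


for n in (1, 3, 6):
    print(n, prob_sup_exceeds(brownian_and_step(n), t=1.0, eps=0.5))
```

The estimated probabilities should fall towards zero as $n$ increases, which is what ucp convergence asserts for each fixed $t$ and $\epsilon$.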

Theorem 7 (Dominated Convergence) Let $X$ be a semimartingale and $\xi^n$ be a sequence of predictable processes converging to a limit $\xi$. If the sequence is dominated by some $X$-integrable process $\alpha$, so that $\vert\xi^n\vert\le\alpha$, then

$$\int\xi^n\,dX\xrightarrow{\rm ucp}\int\xi\,dX$$

and, furthermore, convergence holds in the semimartingale topology.

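Proof: As semimartingale convergence implies ucp convergence, it is enough to show that $\int\xi^n\,dX-\int\xi\,dX=\int(\xi^n-\xi)\,dX$ converges to zero in the semimartingale topology. Choose a sequence $\vert\zeta^n\vert\le1$ of elementary predictable processes. Then, $\vert\zeta^n(\xi^n-\xi)\vert\le2\alpha$, and dominated convergence in probability gives

$$\int_0^t\zeta^n\,d\left(\int(\xi^n-\xi)\,dX\right)=\int_0^t\zeta^n(\xi^n-\xi)\,dX\to0$$

in probability as $n\to\infty$. By definition, this proves semimartingale convergence. ⬜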
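A single simulated path cannot demonstrate convergence in probability. The Python sketch below only shows the mechanism of Theorem 7 for left-point Riemann sums. It takes $X$ to be a simulated Brownian motion $B$ and the predictable integrand $\xi_s=B_{s-}$. The dominated sequence is $\xi^n=\xi1_{\{\vert\xi\vert\le n\}}$, where $n$ is treated as a real truncation level. The sketch reports the grid supremum of $\vert\int\xi^n\,dB-\int\xi\,dB\vert$ over $[0,1]$. The grid size, seed and truncation levels are illustrative choices. Once the level exceeds $\sup_s\vert B_s\vert$, the truncation does nothing and the gap is exactly zero.

```python
import random


def left_point_integral(integrand, increments):
    """Grid path of int xi dX using the integrand's value at the left end of each interval."""
    path = [0.0]
    for value, dx in zip(integrand, increments):
        path.append(path[-1] + value * dx)
    return path


rng = random.Random(7)
m = 2000
dB = [rng.gauss(0.0, (1.0 / m) ** 0.5) for _ in range(m)]
B = [0.0]
for dx in dB:
    B.append(B[-1] + dx)

xi = B[:-1]                          # xi_s = B_{s-}: left-end values, locally bounded
full = left_point_integral(xi, dB)   # grid version of int xi dB


def sup_gap(level):
    """max_s |int xi^n dB - int xi dB| for the truncation xi^n = xi 1_{|xi| <= level}."""
    xi_n = [value if abs(value) <= level else 0.0 for value in xi]
    approx = left_point_integral(xi_n, dB)
    return max(abs(a - b) for a, b in zip(approx, full))


for level in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(level, sup_gap(level))
```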

The dominated convergence theorem can be used to prove the following result stating that the jumps of a stochastic integral behave in the expected way. Recall that the jump of a cadlag process $X$ is equal to $\Delta X_t\equiv X_t-X_{t-}$. In these notes, when two processes are shown to be equal, this is always taken to mean that they agree up to evanescence.

Corollary 8 If $X$ is a semimartingale and $\xi\in L^1(X)$ then

$$\Delta\left(\int\xi\,dX\right)=\xi\,\Delta X.\tag{5}$$

Proof: Let $\mathcal{S}$ be the set of all $X$-integrable processes $\xi$ such that equation (5) is satisfied. This contains all elementary predictable processes, by the explicit expression for the integral. Consider a sequence $\xi^n\in\mathcal{S}$ which is dominated by some $\alpha\in L^1(X)$ and suppose that $\xi^n\to\xi$. If it can be shown that $\xi\in\mathcal{S}$ then the functional monotone class theorem will imply that $\mathcal{S}=L^1(X)$, as required.

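By Theorem 7, $\int\xi^n\,dX$ converges ucp to $\int\xi\,dX$. Passing to a subsequence if necessary, we may suppose that, almost surely, the sample paths converge uniformly on compacts. The jumps then also converge uniformly on compacts, giving

$$\Delta\left(\int\xi\,dX\right)=\lim_{n\to\infty}\Delta\left(\int\xi^n\,dX\right)=\lim_{n\to\infty}\xi^n\Delta X=\xi\,\Delta X.$$

⬜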
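Corollary 8 can also be seen numerically. In the Python sketch below, $X$ is a hypothetical FV path (a linear drift plus three jumps, chosen for illustration). The integrand $\xi_s=\cos(3s)$ is continuous and deterministic, hence predictable. The sketch approximates $\int\xi\,dX$ by left-point Riemann sums. The increment over the grid cell containing each jump time $u$ should then be close to $\xi_u\Delta X_u$, with an error of the order of the mesh. The names and the grid size are assumptions made for the example.

```python
import math

JUMP_TIMES = [0.2, 0.55, 0.8]   # hypothetical jump times of X
JUMP_SIZES = [1.0, -2.0, 0.5]   # and the corresponding jumps Delta X


def X(s):
    """Cadlag FV path: linear drift 0.3 s plus jumps at JUMP_TIMES."""
    return 0.3 * s + sum(d for u, d in zip(JUMP_TIMES, JUMP_SIZES) if u <= s)


def xi(s):
    """Continuous deterministic (hence predictable) integrand."""
    return math.cos(3.0 * s)


def integral_path(m):
    """Left-point Riemann sums for int xi dX on the grid t_i = i / m of [0, 1]."""
    times = [i / m for i in range(m + 1)]
    path = [0.0]
    for a, b in zip(times, times[1:]):
        path.append(path[-1] + xi(a) * (X(b) - X(a)))
    return times, path


def grid_jump(times, path, u):
    """Increment of `path` over the grid cell (t_{i-1}, t_i] that contains u."""
    i = next(k for k, s in enumerate(times) if s >= u)
    return path[i] - path[i - 1]


times, Y = integral_path(10_000)
for u, d in zip(JUMP_TIMES, JUMP_SIZES):
    print(u, grid_jump(times, Y, u), xi(u) * d)
```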

Stochastic integration preserves certain properties of processes, such as continuity and predictability.

Corollary 9 Suppose that $Y=\int\xi\,dX$ for a semimartingale $X$ and $\xi\in L^1(X)$. If $X$ is continuous (resp. predictable) then so is $Y$.

Proof: If $X$ is continuous then equation (5) shows that $\Delta Y=\xi\,\Delta X=0$, so $Y$ is continuous.

For any cadlag adapted process $Z$, its left-limit $Z_-$ is a left-continuous and adapted process which, by definition, is predictable. So, $Z=Z_-+\Delta Z$ is predictable if and only if $\Delta Z$ is. So, suppose that $X$ is predictable. Then, (5) shows that $\Delta Y=\xi\,\Delta X$ is also predictable and, hence, so is $Y$. ⬜

Finally, the set of semimartingales is a vector space, and stochastic integration is linear in the integrator.

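Lemma 10 Let $X,Y$ be semimartingales and $\lambda,\mu$ be real numbers. Then, $\lambda X+\mu Y$ is a semimartingale. Furthermore, any process $\xi$ which is both $X$-integrable and $Y$-integrable is also $(\lambda X+\mu Y)$-integrable and,

$$\int\xi\,d(\lambda X+\mu Y)=\lambda\int\xi\,dX+\mu\int\xi\,dY.\tag{6}$$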

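Proof: The integral of any bounded predictable process with respect to $\lambda X+\mu Y$ can be defined by (6). This clearly satisfies the explicit expression for elementary integrands, and satisfies bounded convergence in probability, as required. So, $\lambda X+\mu Y$ is a semimartingale. Now, suppose that $\xi\in L^1(X)\cap L^1(Y)$. If $\vert\xi^n\vert\le\vert\xi\vert$ is a sequence of bounded predictable processes tending to a limit $\alpha$, then dominated convergence in probability with respect to $X$ and $Y$ gives

$$\int_0^t\xi^n\,d(\lambda X+\mu Y)=\lambda\int_0^t\xi^n\,dX+\mu\int_0^t\xi^n\,dY\to\lambda\int_0^t\alpha\,dX+\mu\int_0^t\alpha\,dY$$

in probability as $n\to\infty$. Taking $\alpha=0$, the right hand side is zero and, by definition, $\xi\in L^1(\lambda X+\mu Y)$. Then, taking $\alpha=\xi$, dominated convergence shows that the left hand side tends to $\int_0^t\xi\,d(\lambda X+\mu Y)$, giving (6). ⬜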

Dear George,

Talking about Corollary 9 here, I am wondering whether stochastic integration preserves the α-order Hölder continuity of the integrator process X. For example, consider $\int_0^tV_s\,dB_s$, with V an adapted process and B a standard Brownian motion. It is well-known that, almost surely, B is Hölder continuous of order α for each α ∈ (0,1/2).

Now my question is: is this Itô integral also Hölder continuous with the same order α? If not, what additional conditions do we need to make it so? I personally think that this should depend on the properties of the process V, but cannot find a reference on this. A positive answer or a counter-example are both welcome!

Thanks in Advance!

Rocky

Hi. No, the integral does not have to be Hölder continuous. It’s getting late here, so I don’t have time to construct a detailed example. Just restricting to deterministic V, you can ensure that the integral fails to satisfy any given modulus of continuity. To do this, consider the integral as a time change of Brownian motion and use the law of the iterated logarithm. By a similar time-change argument, you just need to look at the modulus of continuity of $\int_0^tV_s^2\,ds$ to find sufficient conditions for the stochastic integral to be Hölder continuous.
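The time change mentioned here can be illustrated for a deterministic integrand. Then $\int_0^tV_s\,dB_s$ is a centred Gaussian process, namely a Brownian motion run at the clock $A_t=\int_0^tV_s^2\,ds$, so in particular its variance at time $t$ is $A_t$. The Python sketch below checks this variance by Monte Carlo for the arbitrarily chosen $V_s=1+s$, where $A_1=7/3$. The grid size, sample size, seed and function names are illustrative assumptions. The simulation says nothing by itself about Hölder continuity.

```python
import random


def V(s):
    """Hypothetical deterministic integrand."""
    return 1.0 + s


def clock(t, m=200):
    """A_t = int_0^t V(s)^2 ds, by the same left-point rule used in the simulation."""
    h = t / m
    return sum(V(i * h) ** 2 * h for i in range(m))


def simulate_integral(t, rng, m=200):
    """Left-point Riemann sum for int_0^t V dB along one simulated Brownian path."""
    h = t / m
    return sum(V(i * h) * rng.gauss(0.0, h ** 0.5) for i in range(m))


rng = random.Random(42)
samples = [simulate_integral(1.0, rng) for _ in range(4000)]
mean = sum(samples) / len(samples)
var = sum((x - mean) ** 2 for x in samples) / (len(samples) - 1)
print(var, clock(1.0), 7 / 3)  # all three should be close
```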

Hi George,

I am curious how you define the Lebesgue-Stieltjes integral in Lemma 1 for integrands that are not Riemann integrable (this can even be the classical deterministic example). Is there a Lebesgue integral equivalent for the pathwise integral? Sorry if this is a very primitive question 🙂 Thanks in advance.

P.S.: There seems to be a small typo in the second paragraph of the proof of Theorem 2: It should say (not dX).

[GL: I edited your LaTeX]

Given any right-continuous finite variation function $f\colon[0,t]\to\mathbb{R}$ you can define the Lebesgue-Stieltjes integral $\int_0^tg\,df$ for bounded measurable $g$, and more generally for measurable $g$ with $\int_0^t\vert g\vert\,\vert df\vert<\infty$. Here, $df$ is a finite signed measure on (0,t] satisfying $\int_0^t1_{(0,u]}\,df=f(u)-f(0)$ for $u\in[0,t]$, and $\vert df\vert$ is its variation. This is quite standard, and I’ll give you a reference when I get a moment to look it up. It’s probably easiest to show that this is well-defined for f an increasing function (and generalize by looking at the difference of increasing functions). In that case, you can write the integral as

$$\int_0^tg\,df=\int_{f(0)}^{f(t)}g(f^{-1}(y))\,dy,$$

where I have set $f^{-1}(y)=\inf\{s\in(0,t]\colon f(s)\ge y\}$.
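The formula for increasing $f$ is easy to try out numerically. The Python sketch below computes $f^{-1}$ by bisection and then integrates $g(f^{-1}(y))$ over $(f(0),f(t)]$ with a midpoint rule. The function names, the example $f$ and $g$, and the quadrature are illustrative choices. For $f(s)=s+1_{\{s\ge1/2\}}$ on $[0,1]$, the measure $df$ is Lebesgue measure plus a unit point mass at $1/2$. So with $g(s)=s^2$ the answer should be $1/3+1/4=7/12$.

```python
def f_inverse(f, y, t, iters=60):
    """inf{s in (0, t] : f(s) >= y} by bisection, assuming f is increasing,
    right-continuous and f(0) < y <= f(t)."""
    lo, hi = 0.0, t          # invariant: f(lo) < y <= f(hi)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) >= y:
            hi = mid
        else:
            lo = mid
    return hi


def stieltjes_via_inverse(g, f, t, n=2000):
    """int_(0,t] g df for increasing right-continuous f, as int_{f(0)}^{f(t)} g(f^{-1}(y)) dy."""
    a, b = f(0.0), f(t)
    h = (b - a) / n
    return sum(g(f_inverse(f, a + (j + 0.5) * h, t)) * h for j in range(n))


def f(s):
    """Increasing, right-continuous, with a unit jump at s = 1/2."""
    return s + (1.0 if s >= 0.5 else 0.0)


def g(s):
    return s * s


print(stieltjes_via_inverse(g, f, 1.0), 7 / 12)
```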

Hi, I was wondering, if two integrable previsable processes are indistinguishable, can we say that their integrals are equal almost surely? Don’t seem to remember that being mentioned..

Hmm, I see for indistinguishable, surely left continuous integrands this follows by step function approximation (as the statement is certainly true for simple integrands). Perhaps the full general case follows by an MCT argument…

..now having read onwards, I see that we can use the Itou isometry even for discontinuous local martingales, giving the result.

The definition of “local integrability” in Lemma 5 seems different from the one given on the “Localization”-Page where the running supremum has to be locally integrable. Does the latter refer to the finiteness of the expectations at all times and has nothing to do with stochastic integration?

“Locally X-integrable” has a different meaning to “locally integrable”. The first means that there exist stopping times $\tau_n$ increasing to infinity such that $1_{(0,\tau_n]}\xi$ are X-integrable, i.e., $\xi$ is X-integrable in a local sense. In the second, integrability refers to finite expectation, and the running supremum is also used (as this behaves better under localisation than just looking at the integrability at fixed times).

Hello, thank you for the post. Could you provide any sources?

The results here are all fairly standard. I’ll post some of my references when I have a moment, and I think I’ll create a page on this blog to list my stochastic calculus references.

Minor correction: in the first display after Eq. (4), should perhaps be replaced by

Hi Richard. You are correct – I fixed. Thanks!

I have a question about the dominated convergence theorem as stated here (and elsewhere).

It is unclear to me whether the limit $\xi$ is assumed to be predictable or not. If it is not predictable (and if the predictability does not follow from the assumptions) then one must extend the concept of the integral for such processes.

A pointwise limit of (real-valued) measurable functions is itself measurable, so yes the limit must be predictable.

I was suspecting this was the answer but because we are dealing with an underlying product space $\Omega\times\mathbb{R}$ I did not quite see it, but I do now. Thank you!