Now that it has been shown that stochastic integration can be performed with respect to any local martingale, we can move on to the following important result: stochastic integration preserves the local martingale property. At least, this is true under very mild hypotheses. That the martingale property is preserved under integration of bounded elementary processes is straightforward, and the generalization to predictable integrands can be achieved using a limiting argument. It is necessary, however, to restrict to locally bounded integrands and, for the sake of generality, I start with local sub- and supermartingales.
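To see why the elementary case is straightforward, here is a short derivation for a single elementary term. It assumes that X is a proper submartingale and that $\xi=Z\,1_{(s,t]}$, where $Z$ is a bounded, nonnegative, $\mathcal{F}_s$-measurable random variable; the symbols $Z$, $s$, $t$, $u$, $v$ are introduced here only for illustration. The elementary integral is

$$Y_u\equiv\int_0^u\xi\,dX=Z\,(X_{t\wedge u}-X_{s\wedge u}).$$

For $s\le u\le v$ the variable $Z$ is $\mathcal{F}_u$-measurable, so

$$\mathbb{E}[Y_v-Y_u\mid\mathcal{F}_u]=Z\left(\mathbb{E}[X_{t\wedge v}\mid\mathcal{F}_u]-X_{t\wedge u}\right)\ge0,$$

where the inequality is the submartingale property if $t>u$, and is an equality if $t\le u$, since both terms then equal $X_t$. For $u<s$ we have $Y_u=0$. If also $v<s$ then $Y_v=0$, while if $v\ge s$ the tower property and the previous case (with $u=s$, where $Y_s=0$) give

$$\mathbb{E}[Y_v\mid\mathcal{F}_u]=\mathbb{E}\big[\mathbb{E}[Y_v\mid\mathcal{F}_s]\,\big\vert\,\mathcal{F}_u\big]\ge0.$$

Up to a term at time zero, which does not contribute to the integral, a nonnegative elementary process can be written as $\sum_k Z_k1_{(t_{k-1},t_k]}$ over disjoint intervals with each $Z_k\ge0$ bounded and $\mathcal{F}_{t_{k-1}}$-measurable, so by linearity its integral is again a submartingale. If X is a martingale, the same computation gives equality throughout, with no sign condition on $Z$.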

Theorem 1 Let X be a local submartingale (resp., local supermartingale) and $\xi$ be a nonnegative and locally bounded predictable process. Then, $\int\xi\,dX$ is a local submartingale (resp., local supermartingale).

Proof: We only need to consider the case where X is a local submartingale, as the result will also follow for supermartingales by applying to -X. By localization, we may suppose that  is uniformly bounded and that X is a proper submartingale. So,

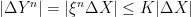

is uniformly bounded and that X is a proper submartingale. So,  for some constant K. Then, as previously shown there exists a sequence of elementary predictable processes

for some constant K. Then, as previously shown there exists a sequence of elementary predictable processes  such that

such that  converges to

converges to  in the semimartingale topology and, hence, converges ucp. We may replace

in the semimartingale topology and, hence, converges ucp. We may replace  by

by  if necessary so that, being nonnegative elementary integrals of a submartingale,

if necessary so that, being nonnegative elementary integrals of a submartingale,  will be submartingales. Also,

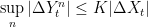

will be submartingales. Also,  . Recall that a cadlag adapted process X is locally integrable if and only its jump process

. Recall that a cadlag adapted process X is locally integrable if and only its jump process  is locally integrable, and all local submartingales are locally integrable. So,

is locally integrable, and all local submartingales are locally integrable. So,

is locally integrable. Then, by ucp convergence for local submartingales, Y will satisfy the local submartingale property. ⬜
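The elementary case that the proof starts from has a direct discrete-time analogue, the martingale transform. The following sketch illustrates only that discrete analogue, not the continuous-time limiting argument. It assumes a ±1 random walk with up-probability `p_up` (a submartingale when `p_up >= 1/2`) and a hypothetical integrand `bounded_bet` taking values in $[0,2]$ that depends only on $X_0,\dots,X_{k-1}$. Using exact `fractions.Fraction` arithmetic, it computes $\mathbb{E}[Y_n-Y_{n-1}\mid\mathcal{F}_{n-1}]$ on every atom of $\mathcal{F}_{n-1}$ and reports the smallest value.

```python
from fractions import Fraction
from itertools import product


def walk(steps):
    """Partial sums X_0 = 0, X_1, ..., X_n of a sequence of +1/-1 steps."""
    xs = [0]
    for s in steps:
        xs.append(xs[-1] + s)
    return xs


def transform(steps, xi):
    """Discrete stochastic integral Y_n = sum_{k<=n} xi_k (X_k - X_{k-1}).

    The integrand is predictable: xi_k is computed from X_0, ..., X_{k-1} only.
    """
    xs = walk(steps)
    ys = [Fraction(0)]
    for k in range(1, len(xs)):
        ys.append(ys[-1] + xi(xs[:k]) * (xs[k] - xs[k - 1]))
    return ys


def bounded_bet(history):
    """Hypothetical nonnegative integrand bounded by K = 2: bet more after falls."""
    return min(2, max(0, 1 - history[-1]))


def min_conditional_drift(p_up, n_steps, xi):
    """Smallest value of E[Y_n - Y_{n-1} | F_{n-1}] over n = 1..n_steps.

    Each atom of F_{n-1} is a prefix of n-1 steps; the n-th step is +1 with
    probability p_up and -1 otherwise, independently of the prefix.
    """
    worst = None
    for n in range(1, n_steps + 1):
        for prefix in product((1, -1), repeat=n - 1):
            drift = Fraction(0)
            for step, prob in ((1, p_up), (-1, 1 - p_up)):
                ys = transform(prefix + (step,), xi)
                drift += prob * (ys[n] - ys[n - 1])
            worst = drift if worst is None else min(worst, drift)
    return worst


if __name__ == "__main__":
    # Up-probability 3/5 > 1/2, so the walk is a submartingale.
    print(min_conditional_drift(Fraction(3, 5), 5, bounded_bet))  # prints 0
```

On each atom the drift is just $\xi_n(2p_{\rm up}-1)$, so the discrete check is elementary; the substance of Theorem 1 is that nonnegativity of these conditional increments survives the ucp limit, which is where the bound on the jumps is needed.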

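For local martingales, which are simultaneously local submartingales and local supermartingales, applying this result to the positive and negative parts $\xi^+=\xi\vee0$ and $\xi^-=(-\xi)\vee0$ and using $\int\xi\,dX=\int\xi^+\,dX-\int\xi^-\,dX$ gives the following.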

Theorem 2 Let X be a local martingale and $\xi$ be a locally bounded predictable process. Then, $\int\xi\,dX$ is a local martingale.
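The decomposition behind Theorem 2 can be checked exactly in a discrete-time toy model. This sketch assumes a fair ±1 random walk (a martingale, hence both a sub- and a supermartingale) and a hypothetical signed integrand `signed_bet`, bounded by 2 and computed only from past values. It verifies that every conditional increment of the transform $Y=\int\xi\,dX$ vanishes and that $Y=\int\xi^+\,dX-\int\xi^-\,dX$ holds path by path. It only illustrates the discrete analogue, not the continuous-time statement.

```python
from fractions import Fraction
from itertools import product

HALF = Fraction(1, 2)  # fair walk: the +1/-1 steps are equally likely


def transform(steps, xi):
    """Y_n = sum_{k<=n} xi(X_0..X_{k-1}) * (X_k - X_{k-1}) along one path."""
    xs = [0]
    for s in steps:
        xs.append(xs[-1] + s)
    ys = [Fraction(0)]
    for k in range(1, len(xs)):
        ys.append(ys[-1] + xi(xs[:k]) * (xs[k] - xs[k - 1]))
    return ys


def signed_bet(history):
    """Hypothetical signed integrand bounded by 2, using only past values."""
    return max(-2, min(2, -history[-1]))


def positive_part(xi):
    """xi^+ = max(xi, 0), still predictable and bounded."""
    return lambda history: max(xi(history), 0)


def negative_part(xi):
    """xi^- = max(-xi, 0), still predictable and bounded."""
    return lambda history: max(-xi(history), 0)


def conditional_drifts(n_steps, xi):
    """E[Y_n - Y_{n-1} | F_{n-1}] on every atom of F_{n-1}, n = 1..n_steps."""
    drifts = []
    for n in range(1, n_steps + 1):
        for prefix in product((1, -1), repeat=n - 1):
            up = transform(prefix + (1,), xi)
            down = transform(prefix + (-1,), xi)
            drifts.append(HALF * (up[n] - up[n - 1]) + HALF * (down[n] - down[n - 1]))
    return drifts


def decomposition_holds(n_steps, xi):
    """Check Y = Y^+ - Y^- on every path, where Y^+ and Y^- integrate xi^+ and xi^-."""
    xi_plus, xi_minus = positive_part(xi), negative_part(xi)
    for steps in product((1, -1), repeat=n_steps):
        y = transform(steps, xi)
        yp = transform(steps, xi_plus)
        ym = transform(steps, xi_minus)
        if any(a != b - c for a, b, c in zip(y, yp, ym)):
            return False
    return True


if __name__ == "__main__":
    print(all(d == 0 for d in conditional_drifts(5, signed_bet)))  # prints True
    print(decomposition_holds(5, signed_bet))  # prints True
```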

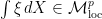

This result can immediately be extended to the class of local $L^p$-integrable martingales, denoted by $\mathcal{M}^p_{\rm loc}$.

Corollary 3 Let $X\in\mathcal{M}^p_{\rm loc}$ for some $1\le p\le\infty$ and $\xi$ be a locally bounded predictable process. Then, $\int\xi\,dX\in\mathcal{M}^p_{\rm loc}$.

