A stochastic differential equation, or SDE for short, is a differential equation driven by one or more stochastic processes. For example, in physics, a Langevin equation describing the motion of a point $X=(X^1,\ldots,X^n)$ in n-dimensional phase space is of the form

$$\frac{dX^i}{dt}=\sum_{j=1}^m a_{ij}(X)\,\eta^j(t)+b_i(X).\qquad(1)$$

The dynamics are described by the functions $a_{ij},b_i\colon\mathbb{R}^n\to\mathbb{R}$, and the problem is to find a solution for X, given its value at an initial time. What distinguishes this from an ordinary differential equation are the random noise terms $\eta^j$ and, consequently, solutions to the Langevin equation are stochastic processes. It is difficult to say exactly how $\eta^j$ should be defined directly, but we can suppose that their integrals $B^j_t=\int_0^t\eta^j(s)\,ds$ are continuous with independent and identically distributed increments. A candidate for such a process is standard Brownian motion and, up to a constant scaling factor and drift term, it can be shown that this is the only possibility. However, Brownian motion is nowhere differentiable, so the original noise terms $\eta^j=dB^j_t/dt$ do not have well defined values. Instead, we can rewrite equation (1) in terms of the Brownian motions. This gives the following SDE for an n-dimensional process $X=(X^1,\ldots,X^n)$,

$$dX^i_t=\sum_{j=1}^m a_{ij}(X_t)\,dB^j_t+b_i(X_t)\,dt,\qquad(2)$$

where $B^1,\ldots,B^m$ are independent Brownian motions. This is to be understood in terms of the differential notation for stochastic integration. It is known that if the functions $a_{ij},b_i$ are Lipschitz continuous then, given any starting value for X, equation (2) has a unique solution. In this post, I give a proof of this using the basic properties of stochastic integration as introduced over the past few posts.
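As a rough illustration of what a solution to an SDE of the form (2) looks like numerically, the following sketch uses the Euler-Maruyama scheme, which is a standard discretization and not the method of proof used in this post. The function name `euler_maruyama`, the coefficient choices and the step count are all assumptions made for the example.

```python
import math
import random

def euler_maruyama(a, b, x0, t_max, n_steps, m, rng):
    """Approximate dX^i = sum_j a_ij(X) dB^j + b_i(X) dt on [0, t_max].

    a(x) returns an n-by-m nested list, b(x) a list of length n.
    This is a sketch of the Euler-Maruyama scheme, not an exact solver.
    """
    dt = t_max / n_steps
    path = [list(x0)]
    for _ in range(n_steps):
        x = path[-1]
        # Brownian increments over one step are independent N(0, dt) variables.
        db = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(m)]
        a_x, b_x = a(x), b(x)
        path.append([
            x[i] + sum(a_x[i][j] * db[j] for j in range(m)) + b_x[i] * dt
            for i in range(len(x))
        ])
    return path

# Hypothetical Lipschitz coefficients: constant noise with a mean-reverting drift.
path = euler_maruyama(
    a=lambda x: [[0.3, 0.0], [0.0, 0.3]],
    b=lambda x: [-x[0], -x[1]],
    x0=[1.0, -1.0],
    t_max=1.0, n_steps=1000, m=2, rng=random.Random(0),
)
print(len(path), path[-1])
```

With the noise switched off, the scheme reduces to the ordinary Euler method for the drift alone, which gives a quick sanity check.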

First, in keeping with these notes, equation (2) can be generalized by replacing the Brownian motions $B^j$ and time t by arbitrary semimartingales. As always, we work with respect to a complete filtered probability space $(\Omega,\mathcal{F},\{\mathcal{F}_t\}_{t\ge0},\mathbb{P})$. In integral form, the general SDE for a cadlag adapted process $X=(X^1,\ldots,X^n)$ is as follows,

$$X^i=N^i+\sum_{j=1}^m\int a_{ij}(X)\,dZ^j.\qquad(3)$$

Here, $Z^1,\ldots,Z^m$ are semimartingales. For example, as well as Brownian motion, Lévy processes are often also used. If $N^i$ are $\mathcal{F}_0$-measurable random variables, they simply specify the starting value $X_0=N$. More generally, in (3), we can allow N to be any cadlag and adapted process, which acts as a `source term’ in the SDE. Furthermore, rather than just being functions of X at time t, we allow $a_{ij}(X)_t$ to be a function of the process X at all times up to t. For example, it could depend on its maximum so far, or a running average. In that case, we instead impose a functional Lipschitz condition as follows. The notation $X^*_t\equiv\sup_{s\le t}\Vert X_s\Vert$ is used for the maximum of a process X, and ${\rm D}^n$ denotes the set of all cadlag and adapted n-dimensional processes.

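- (P1) $X\mapsto a_{ij}(X)$ is a map from ${\rm D}^n$ to the set $L^1(Z^j)$ of predictable and $Z^j$-integrable processes.

- (P2) There is a constant K such that $|a_{ij}(X)_t-a_{ij}(Y)_t|\le K(X-Y)^*_{t-}$ for all times $t\ge0$ and $X,Y\in{\rm D}^n$.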
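To see how a path-dependent coefficient can fit the functional Lipschitz condition, here is a short check for the running-maximum example. The function g and the coefficient a below are illustrative assumptions rather than anything defined in the post, and (P2) is read in the form $|a(X)_t-a(Y)_t|\le K(X-Y)^*_{t-}$.

```latex
% Assumption: g is a K-Lipschitz function on the reals and a(X)_t = g(X^*_{t-}),
% where X^*_{t-} = \sup_{s<t} \|X_s\| is the running maximum strictly before time t.
\begin{align*}
|a(X)_t - a(Y)_t|
  &= |g(X^*_{t-}) - g(Y^*_{t-})| \\
  &\le K\,|X^*_{t-} - Y^*_{t-}| \\
  &\le K\,\sup_{s<t}\|X_s - Y_s\| = K\,(X-Y)^*_{t-}.
\end{align*}
```

The last step uses the fact that two suprema differ by at most the supremum of the differences. Since $g(X^*_{t-})$ is left-continuous and adapted, it is predictable and locally bounded, which is what (P1) asks for.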

The special case where $a_{ij}(X)_t$ is a Lipschitz continuous function of $X_{t-}$ clearly satisfies both (P1) and (P2). The existence and uniqueness theorem for SDEs with Lipschitz continuous coefficients is as follows.

Theorem 1 Suppose that $a_{ij}$ satisfy properties (P1), (P2). Then, there is a unique $X\in{\rm D}^n$ satisfying SDE (3).

Recall that, throughout these notes, we are identifying any processes which agree on a set of probability one so that, here, uniqueness refers to uniqueness up to evanescence.

Simple examples of SDEs with Lipschitz coefficients include linear equations such as mean reverting Ornstein-Uhlenbeck processes, geometric Brownian motion and Doléans exponentials.
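As a small sketch of one of these linear examples, geometric Brownian motion solves $dX=\sigma X\,dB+\mu X\,dt$ and has the well-known closed form $X_t=X_0\exp(\sigma B_t+(\mu-\sigma^2/2)t)$. The code below compares an Euler discretization with that closed form along the same simulated Brownian path. The function name and parameter values are assumptions chosen for the illustration.

```python
import math
import random

def gbm_euler_vs_exact(x0, mu, sigma, t_max, n_steps, rng):
    """Return (Euler approximation, closed-form value) of geometric Brownian motion at t_max."""
    dt = t_max / n_steps
    x, b_t = x0, 0.0
    for _ in range(n_steps):
        db = rng.gauss(0.0, math.sqrt(dt))
        # Linear (hence Lipschitz) coefficients: a(x) = sigma * x, b(x) = mu * x.
        x += sigma * x * db + mu * x * dt
        b_t += db  # accumulate the Brownian path used by the closed form
    exact = x0 * math.exp(sigma * b_t + (mu - 0.5 * sigma ** 2) * t_max)
    return x, exact

approx, exact = gbm_euler_vs_exact(1.0, 0.1, 0.2, 1.0, 4000, random.Random(2))
print(approx, exact, abs(approx - exact))
```

The two values should agree closely, and the gap shrinks as the number of steps grows.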

After existence and uniqueness, an important property of solutions to SDEs is stability. That is, small changes to the coefficients only have a small effect on the solution. The notion of a `small change’ first needs to be made precise by specifying a topology on these terms. For the coefficients $a_{ij}$, uniform convergence on bounded sets will be used. Given maps $a^r_{ij}\colon{\rm D}^n\to L^1(Z^j)$

for r=1,2,…, I will say that $a^r_{ij}\to a_{ij}$

uniformly on bounded sets if, for each

tends to zero as r goes to infinity. For the solutions X and source term N, which are cadlag processes, the appropriate topology is that of convergence uniformly on compacts in probability (ucp convergence). Recall that $X^r\xrightarrow{\rm ucp}X$ if $(X^r-X)^*_t\to0$ in probability for each t. The stability of solutions to SDEs under small changes to the coefficients can then be stated precisely.

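Theorem 2 Suppose that $a_{ij}$ satisfy properties (P1), (P2) and let X be the unique solution to the SDE (3) given by Theorem 1. Suppose furthermore that $N^r\in{\rm D}^n$ and $a^r_{ij}\colon{\rm D}^n\to L^1(Z^j)$ are sequences such that $N^r\xrightarrow{\rm ucp}N$ and $a^r_{ij}\to a_{ij}$ uniformly on bounded sets. Then, any sequence of processes $X^r\in{\rm D}^n$ satisfying

$$X^{r,i}=N^{r,i}+\sum_{j=1}^m\int a^r_{ij}(X^r)\,dZ^j$$

converges ucp to X as r goes to infinity.

Equivalently, the map from $(N,a_{ij})$ to the solution X satisfying SDE (3) is continuous under the respective topologies.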
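The stability statement can be explored numerically. The sketch below is only a discretized illustration, not a proof. It drives a scalar Euler scheme with one fixed set of Brownian increments, perturbs both the starting value and the noise coefficient by 1/r, and records the largest gap between the perturbed and unperturbed paths. The coefficients and helper names are assumptions made for the example.

```python
import math
import random

def euler_path(a, b, x0, increments, dt):
    """Euler scheme for the scalar SDE dX = a(X) dB + b(X) dt along given increments."""
    path = [x0]
    for db in increments:
        x = path[-1]
        path.append(x + a(x) * db + b(x) * dt)
    return path

def sup_distance(p, q):
    """Largest pointwise gap between two paths on the same grid."""
    return max(abs(u - v) for u, v in zip(p, q))

rng = random.Random(1)
n_steps, dt = 2000, 1.0 / 2000
increments = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(n_steps)]

# Hypothetical linear (Lipschitz) coefficients.
a = lambda x: 0.5 * x + 1.0
b = lambda x: -x
base = euler_path(a, b, 1.0, increments, dt)

gaps = []
for r in (1, 10, 100):
    # Perturb the coefficient uniformly by 1/r and the starting value by 1/r.
    a_r = lambda x, r=r: a(x) + 1.0 / r
    perturbed = euler_path(a_r, b, 1.0 + 1.0 / r, increments, dt)
    gaps.append(sup_distance(base, perturbed))
print(gaps)
```

Because the coefficients here are linear, the gap scales proportionally to 1/r, so the printed values decrease as the perturbation shrinks.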

Proof of Existence and Uniqueness

Throughout this subsection, assume that N is a cadlag adapted n-dimensional process, $Z^1,\ldots,Z^m$ are semimartingales and that $a_{ij}$ satisfy properties (P1), (P2) above. To simplify the notation a bit, for any $X\in{\rm D}^n$, write $F(X)$ to denote the following n-dimensional process,

$$F(X)^i=N^i+\sum_{j=1}^m\int a_{ij}(X)\,dZ^j.$$

So, $F\colon{\rm D}^n\to{\rm D}^n$ and equation (3) just says that X=F(X). Existence and uniqueness of solutions to this SDE is equivalent to F having a unique fixed point.

The `standard’ proof for Lipschitz continuous coefficients, at least when the driving semimartingales are Brownian motions, makes use of the Ito isometry to construct a norm on the cadlag processes under which F is a contraction. The result then follows from the contraction mapping theorem. Although this method can also be applied for arbitrary semimartingales, it is more difficult and some rather advanced results on semimartingale decompositions are required. However, the aim here is to show how existence and uniqueness follow for all semimartingales as a consequence of the basic properties of stochastic integration, so a different approach is taken. Furthermore, the method used here, which constructs discrete approximations making the error term X-F(X) small, is closer to methods which might be employed in practice when explicitly simulating solutions to (3).

First, the following inequality bounds the values of a positive semimartingale X in terms of the maximum value of the stochastic integral .

Lemma 3 Let X be a positive semimartingale. Then,

Proof: For any continuous decreasing function ,

is a cadlag decreasing process and integration by parts gives the following,

It is easily seen that is constant over any interval

on which

and, therefore, the integral above with respect to

is zero over such intervals. So, we can replace the integrand

by

and, again applying integration by parts,

| (4) |

As the process is cadlag, there must exist an such that either

or

. In the first case, evaluating (4) at time s gives

The final equality follows from the fact that must be constant on the interval [s,t]. Similarly, in the case where

, the same inequality results from evaluating (4) at time s-.

We are only interested in the special case . The next step is to apply the generalized Ito formula to

.

The inequality follows from concavity of the logarithm and, putting

shows that the summation above is non-positive,

⬜

The general idea behind the proofs given here is that if X,Y are processes such that the error terms X-F(X) and Y-F(Y) to the SDE (3) are small then

The Lipschitz property implies that the integrand is bounded by

, and the following result will be used to show that

.

Lemma 4 Let

be a sequence of n-dimensional processes satisfying

for predictable processes

and cadlag adapted processes

converging ucp to zero as r goes to infinity. Then,

.

Proof: By definition of ucp convergence, for any the limit

holds as r goes to infinity. Therefore, there exists a sequence

such that

. So, by stopping the processes as soon as

, we may assume that

for all r.

Now, writing ,

for predictable processes , which are uniformly bounded by K. Applying Lemma 3 to the nonnegative semimartingale

,

However, applying integration by parts to ,

The inequality shows that the integrands on the right hand side of the above expression are all bounded by

in the first integral and by

in the second integral. Hence, by dominated convergence, if

is a sequence of real numbers then

in probability as . This shows that the sequence

is bounded in probability as

and, by exponentiating,

is bounded in probability. However,

and therefore,

. Finally,

as required. ⬜

Now, with the aid of the previous result, it is possible to construct approximate solutions to the SDE (3) making the error X-F(X) as small as we like.

Lemma 5 For any

there exists an

satisfying

.

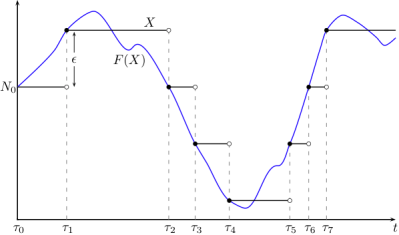

Proof: The idea is to define X inductively as a piecewise constant function across finite time intervals, while adjusting the time step sizes to force the error to remain within the tolerance $\epsilon$. To do this, define stopping times

and processes

by

and, for

,

Then, increases to some limit

as r goes to infinity, and we can define the process X on the time interval

by

whenever

. By definition,

on

(see Figure 1). To complete the proof, it just needs to be shown that

almost surely.

First, we show that X cannot explode at any finite time. The process is bounded by

, and choosing a sequence of real numbers

, the stopped processes

can be written as,

The final term on the right hand side tends ucp to zero as r goes to infinity, and the Lipschitz property implies that is bounded by

. Lemma 4 then gives

. So, for any time

, the sequence

is bounded in probability and, therefore,

is almost surely bounded on the interval

whenever

. In particular,

is locally bounded and hence is an X-integrable process. So, whenever

, the limit

exists with probability one.

However, from the definition of X,

contradicting the convergence of . So

almost surely, as required. ⬜
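The construction in this proof can be mimicked on a time grid. The following is a minimal sketch for a scalar equation with a constant source term and a coefficient depending only on the current value of X, all of which are simplifying assumptions. The process X is held constant and is reset to F(X) whenever the two differ by at least the tolerance, so the error stays below the tolerance at every grid point.

```python
import math
import random

def epsilon_approximation(a, n0, increments, eps):
    """Piecewise constant X tracking F(X) = n0 + sum a(X_prev) dZ on a grid.

    Returns the paths of X and F(X) and the number of resets.
    Discretized sketch only: a depends on the previous grid value of X.
    """
    x = [n0]  # X starts at F(X)_0 = n0
    f = [n0]  # running value of F(X)
    resets = 0
    for dz in increments:
        # F(X) at the new time uses only X at the previous time (backward-looking).
        f_new = f[-1] + a(x[-1]) * dz
        if abs(x[-1] - f_new) >= eps:
            x_new = f_new  # error reached the tolerance: reset X to F(X)
            resets += 1
        else:
            x_new = x[-1]  # otherwise X stays constant
        x.append(x_new)
        f.append(f_new)
    return x, f, resets

rng = random.Random(3)
dt = 1.0 / 5000
dz = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(5000)]
eps = 0.05
x, f, resets = epsilon_approximation(lambda v: math.sin(v) + 2.0, 1.0, dz, eps)
print(resets, max(abs(u - v) for u, v in zip(x, f)))
```

Resetting X does not alter F(X) at the same or any earlier grid time, because each increment of F(X) is computed from the previous value of X only.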

Next, if we construct a sequence of processes $X^r$ such that the error terms $X^r-F(X^r)$ go to zero, then they necessarily converge to the solution to the SDE.

Lemma 6 Suppose that $X^r\in{\rm D}^n$ satisfy $X^r-F(X^r)\xrightarrow{\rm ucp}0$ as r tends to infinity. Then, $X^r\xrightarrow{\rm ucp}X$ for some cadlag adapted process X satisfying X=F(X).

Proof: Setting and

then

and as r and s go to infinity. Furthermore, by Lipschitz continuity,

. So, Lemma 4 says that

as r,s go to infinity.

By completeness under ucp convergence, for some

. Passing to a subsequence if necessary, we may suppose that

tends to X uniformly on bounded intervals. So, dominated convergence gives

as required. ⬜

Existence and uniqueness of solutions, as stated by Theorem 1, now follows from the previous lemmas.

Theorem 7 There is a unique $X\in{\rm D}^n$ satisfying F(X)=X.

Proof: First, for uniqueness, suppose that X and Y are two such solutions. Forming the infinite sequence $X^r$ by alternating these, $X^r=X$ for r even and $X^r=Y$ for r odd, Lemma 6 says that this is convergent, so X=Y.

To prove existence, note that Lemma 5 implies the existence of a sequence of cadlag adapted processes $X^r$ satisfying $X^r-F(X^r)\xrightarrow{\rm ucp}0$ and, then, Lemma 6 says that $X^r\xrightarrow{\rm ucp}X$ for some solution to X=F(X). ⬜

Finally, the proof of Theorem 2 also follows from the results above.

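Theorem 8 Suppose that $a_{ij}$ satisfy properties (P1), (P2) and let X be the unique solution to the SDE (3) given by Theorem 1. Suppose furthermore that $N^r\in{\rm D}^n$ and $a^r_{ij}\colon{\rm D}^n\to L^1(Z^j)$ are sequences such that $N^r\xrightarrow{\rm ucp}N$ and $a^r_{ij}\to a_{ij}$ uniformly on bounded sets. Then, any sequence of processes $X^r\in{\rm D}^n$ satisfying

$$X^{r,i}=N^{r,i}+\sum_{j=1}^m\int a^r_{ij}(X^r)\,dZ^j$$

converges ucp to X as r goes to infinity.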

Proof: For any , let

be the first time at which

,

As is uniformly bounded by L over the interval

, dominated convergence implies that the first term inside the final parenthesis converges ucp to zero as r goes to infinity. Also, by Lipschitz continuity,

is bounded by

. So, applying Lemma 4 to the above expression gives

. It remains to show that the non-stopped processes

also converge ucp to zero.

Fix any . Note that if

and

then

.

By choosing L large, this can be made as small as we like. Finally,

By ucp convergence of the stopped processes the first term on the right hand side vanishes as r goes to infinity, and the second term can be made as small as we like by choosing L large. Therefore,

in probability, as required. ⬜

Dear George, in lemma 4, the stated result implies that  if  . I understand the technical steps in the proof, but I was wondering whether there is an intuitive explanation to this result.

I read through the proof carefully and understand the technical steps. But I didn’t yet grasp the intuition behind Lemma 4 which seems to be the key to the whole proof.

For instance, setting

Thanks!

Please ignore my example for $N^r=0$, I just figured out what I was missing in my understanding of it…

Dear George,

I have a small question regarding what you called “The `standard’ proof for Lipschitz continuous coefficients, at least when the driving semimartingales are Brownian motions”. Do you know if there is any research in this subject in the direction of trying to use different fixed point theorems instead of the Banach one (like Schauder, Leray-Schauder, or even some cone-compression/expansion theorems)? Of course you can lose uniqueness this way, but instead of having Lipschitz coefficients, some sort of boundedness can be enough as well. Furthermore, in the cone-compression/expansion case, you can also have localization of solutions. The intuition behind this question is that I’ve seen these methods used for deterministic ODEs (sometimes even PDEs), and there are cases when they yield better results than the Banach fixed point theorem, therefore the research in this direction is quite well motivated. I thought you would be the right person to ask this question.

Thank you in advance.

Best regards.

Thank you George for these useful notes. I’ve never seen the basic theory of SDEs presented at this level of generality before in any book. Is there a reference you based this on?

Sorry, I missed this comment when it was posted. In case anyone is interested: No, there is no reference that this is based on. There are various standard ways of proving existence and uniqueness (such as Picard iteration), but they are all rather complex or require more properties of semimartingales than I wanted to use here. I came up with this proof instead, which only makes use of the first properties of stochastic integration with the simple axiomatic definition used in these notes.

Dear George,

I think it would be nicer to not use the generalized Ito formula in the proof of lemma 3. This is easily achievable because for every right continuous and increasing positive function we have the following:

Ok, thanks, that does indeed work. I will consider updating. Btw, that’s a very ambitious latex formula for a comment. I fixed the parse failure and aligned the equations.

Dear George,

There is something that I don’t understand in the proof of lemma 5. During the proof, after you construct the processes $X^r$ you define the process X, and at that point we don’t know that X is cadlag. But later in the proof you use the process F(X), although the functions $a_{ij}$ have domain in the cadlag processes. Isn’t that a problem at that point?

I don’t think it is a problem, just the explanation should be tidied up. The point is that we can define F(X) over the interval [0,tau), even though we do not yet know that X has a left limit at tau. This is because F is backwards looking, F(X)_t only depends on X at times before t. More precisely, we actually define a process which is defined on each interval [0,tau_r] as equalling F(X^r), which I denoted as F(X).

The whole proof could be tidied. We are really only constructing a single process X, but doing this by inductively extending it over a sequence of time steps. Due to notation issues, I denoted each of these newly extended processes as a sequence of processes, rather than just one process. Also, extending across each time step involves evaluating F(X), but it is well defined as the value of F(X) only depends on the values of X already constructed.

Hi George at the end of the proof of Lemma 5, you write ||X_{\tau_r}-X_{\tau_{r-1}}|| = ||X_{\tau_r}^r – F(X^r)_{\tau_r}|| from the definition of X, but I am not able to get this equality from the definition of X here. Could you explain how you get this in more detail to me?

This should follow quite quickly from the definitions, since X^r equals X on the relevant ranges. I don’t have time to go into details now, but maybe the diagram helps. Note, X^r equals X before time tau_r, and is constant over t >= tau_{r-1}.

Oh thank you that clears it up. There is still one question I can’t answer confidently. Why is it true that \tau_{r+1}>\tau_r?

Is this why \tau_{r+1}>\tau_r?

So the definition of \tau_{r+1} is the first time since \tau_r that X^{r+1} and F(X^{r+1}) differ by at least \epsilon. At the time \tau_r, X^{r+1} is just F(X^r)_{\tau_r}. But F(X^{r+1})_{\tau_r} is N_{\tau_r} + (\int a_{ij}(X^{r+1}) dZ)_{\tau_r}. The N components cancel out since they are the same. The problem is the stochastic integral part. If I understood correctly your assumption on a_{ij}, they only depend on time leading up to t but not including t, so since X^{r+1} is equal to X^r for times t<\tau_r, the stochastic integrals must also be equal hence at the time \tau_r, X^{r+1} and F(X^{r+1}) have difference 0. Since they are both right continuous processes, they must differ by at least \epsilon at a time greater than \tau_r, or never, in which case \tau_{r+1}=\infty.

Yes, although more succinctly, F(X)_t only depends on X_s for times s < t. Hence, .

Again though, Figure 1 should help. X is piecewise constant and jumps to equal F(X) whenever it deviates by epsilon from it. As it equals F(X) at that point, there will be some time before it jumps again.

The fact that F(X) only depends on X at previous times, means that making X jump does not change the value of F(X) at that or any prior time. This is important to the construction.